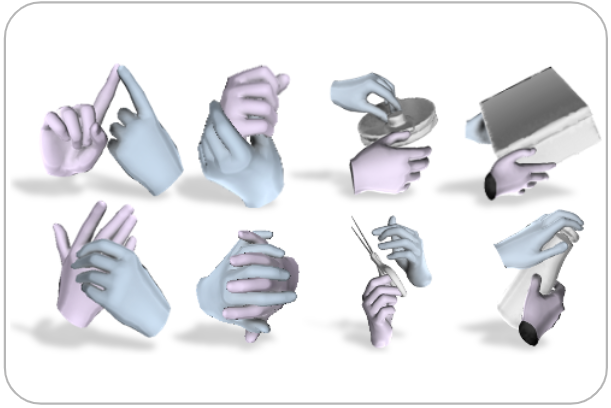

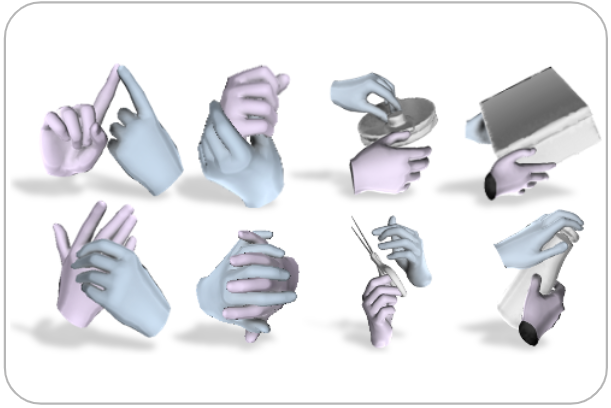

Dense Hand-Object (HO) GraspNet with Full Grasping Taxonomy and Dynamics

Woojin Cho, Jihyun Lee, Minjae Yi, Minje Kim, Taeyun Woo, Donghwan Kim, Taewook Ha, Hyokeun Lee, Je-Hwan Ryu, Woontack Woo, Tae-Kyun Kim

ECCV 2024

[Project] [Paper] [Code]

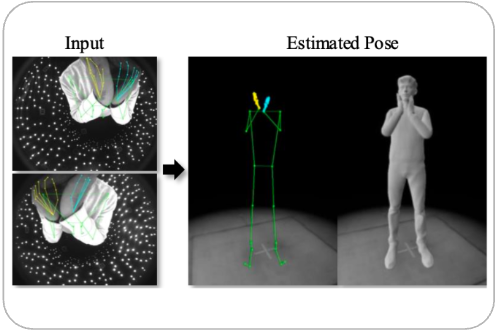

InterHandGen: Two-Hand Interaction Generation via Cascaded Reverse Diffusion

Jihyun Lee, Shunsuke Saito, Giljoo Nam, Minhyuk Sung, Tae-Kyun Kim

CVPR 2024

[Project] [Paper] [Code]

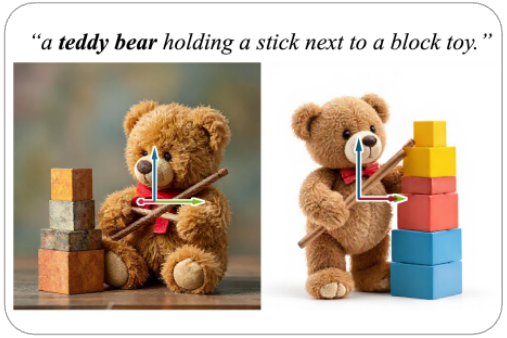

FourierHandFlow: Neural 4D Hand Representation Using Fourier Query Flow

Jihyun Lee, Junbong Jang, Donghwan Kim, Minhyuk Sung, Tae-Kyun Kim

NeurIPS 2023

[Project] [Paper] [Code]

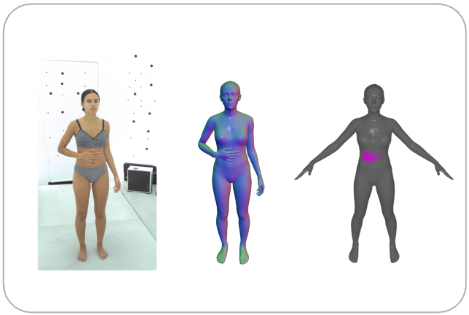

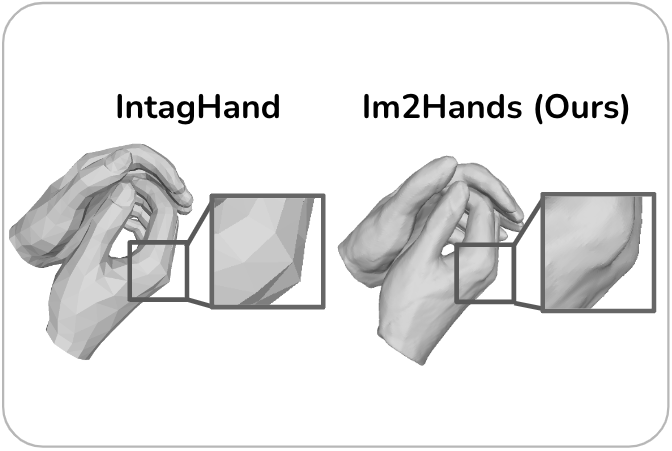

Im2Hands: Learning Attentive Implicit Representation of Interacting Two-Hand Shapes

Jihyun Lee, Minhyuk Sung, Honggyu Choi, Tae-Kyun Kim

CVPR 2023

[Project] [Paper] [Code] [Presentation] [Poster]

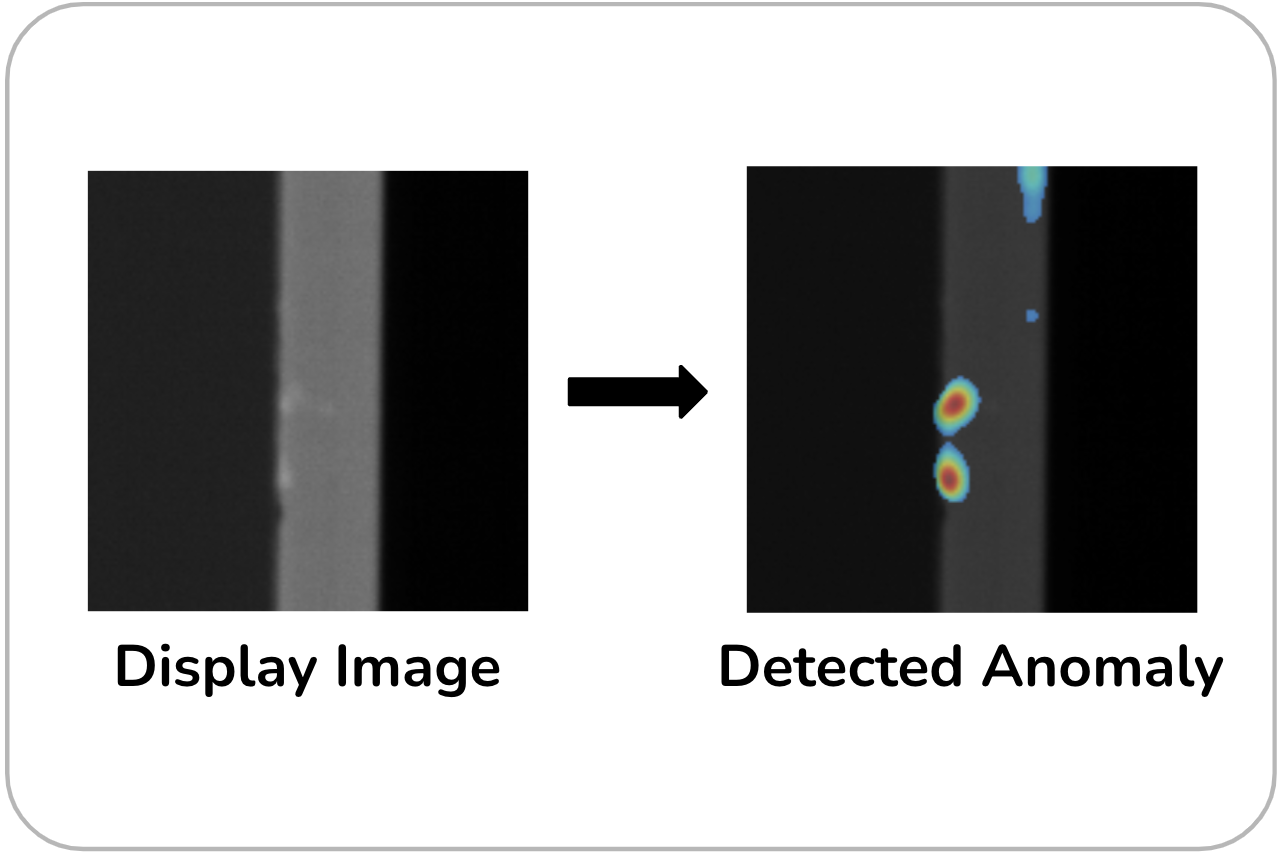

Contrastive Knowledge Distillation for Anomaly Detection in Multi-Illumination/Focus Display Images

Hangil Park*, Jihyun Lee*, Yongmin Seo, Taewon Min, Joodong Yun, Jaewon Kim, Tae-Kyun Kim (* equal contributions)

MVA 2023 (oral)

Written as a report of an industrial research project with Samsung Display

[Paper]

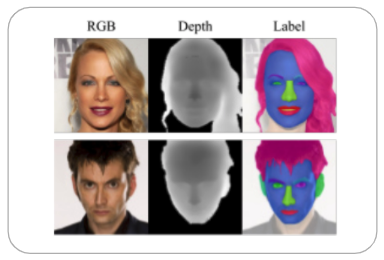

Face Parsing from RGB and Depth Using Cross-Domain Mutual Learning

Jihyun Lee, Binod Bhattarai, Tae-Kyun Kim

CVPRW 2021 (oral), IEEE AMFG (acceptance rate: 27%)

[Paper] [Dataset]

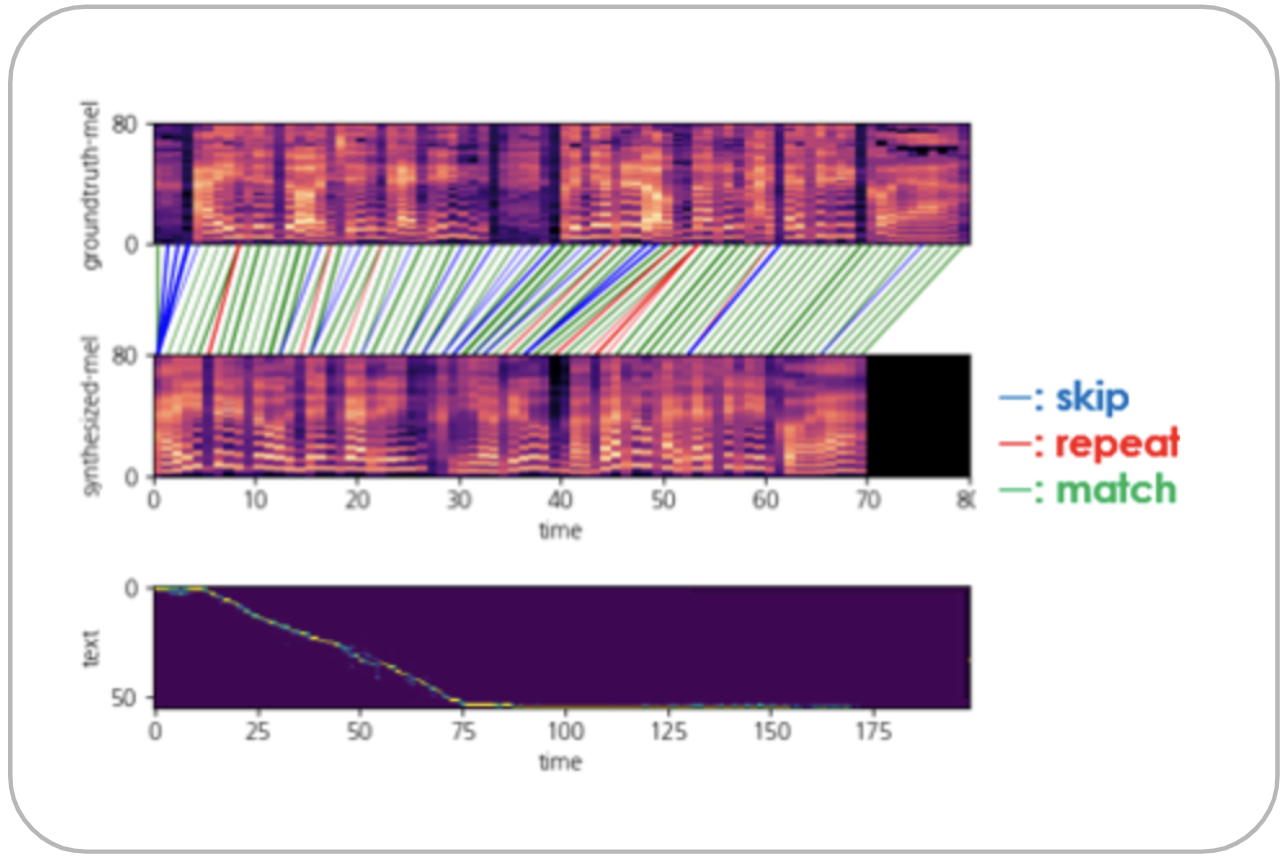

Fast DCTTS: Efficient Deep Convolutional Text-to-Speech

Minsu Kang, Jihyun Lee, Simin Kim, Injung Kim

ICASSP 2021

[Paper]

Awards & Honors

- Outstanding Reviewer 2024, ECCV

- Outstanding TA Award 2022, KAIST

  - Awarded to TAs who earned the highest ratings on the KAIST CS student survey

- Excellence Award (Mayoral Award) at the Korea Software Convergence Hackathon 2019, Ministry of Science and ICT of Korea

  - Developed an automatic traffic light control system based on reinforcement learning

- Honorable Mention Award at the Undergraduate Student Paper Competition 2019, Korea Software Congress (KSC) and Korea Computer Congress (KCC)

  - Proposed a method to reduce the blocking effects of DCT-based X-ray grid line suppression techniques

- Silver Prize at the Undergraduate Student Paper Competition 2018, Korea Information Processing Society (KIPS)

  - Proposed a DCT-based X-ray grid line suppression method using dynamic segmentation

Academic Activities

Reviewer

- CVPR, CVPR Workshops

- NeurIPS

- ICCV

- ECCV

- ICML

- AAAI

- IJCV

- Pacific Graphics

- Image and Vision Computing (Elsevier Journal)

Student Organizer

Teaching Experiences

- Teaching Assistant, Artificial Intelligence and Machine Learning, 2021-2023, KAIST

- Teaching Assistant, Machine Learning for Computer Vision, 2021-2022, KAIST

- Teaching Assistant, Operating System, 2020, Handong Global University

- Teaching Assistant, C++ Programming, 2019, Handong Global University

- Teaching Assistant, Data Structure, 2018, Handong Global University